Sparse train

Fast neural network training by dynamic sparsification

A fast implementation of "PruneTrain: Fast Neural Network Training by Dynamic Sparse Model Reconfiguration", which prunes the model during training to reduce training time.

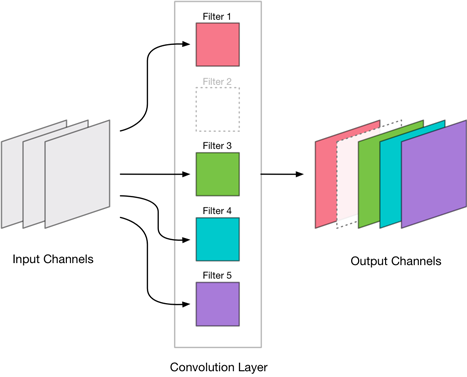

The model architecture is modified during training by removing entire convolutional layers rather than individual weights, so the remaining computation stays dense and GPU-friendly (mechanical sympathy). See here for details.

Example of convolution layer channel pruning (source)

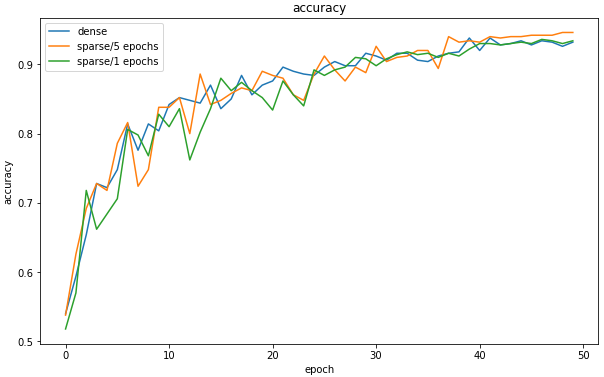
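The channel pruning pictured above can be sketched in a few lines. This is a minimal illustration, not this repository's code: the function name `prune_channels`, the L2-norm criterion, and the `threshold` value are all assumptions made for the example. It uses NumPy arrays laid out as `(out_channels, in_channels, kernel_h, kernel_w)`.

```python
import numpy as np


def prune_channels(w1, w2, threshold=1e-3):
    """Drop near-zero output channels of one conv layer and the
    matching input channels of the layer that consumes its output.

    Hypothetical helper for illustration only. Biases and batch-norm
    parameters would need the same mask and are left out here.
    """
    # L2 norm of each output channel's filter, shape (out_channels,)
    norms = np.sqrt((w1 ** 2).sum(axis=(1, 2, 3)))
    keep = norms > threshold
    # Removing output channel c of layer 1 removes input channel c of layer 2.
    return w1[keep], w2[:, keep], keep


rng = np.random.default_rng(0)
w1 = rng.normal(size=(8, 3, 3, 3))    # conv1: 3 -> 8 channels
w2 = rng.normal(size=(16, 8, 3, 3))   # conv2: 8 -> 16 channels
w1[[2, 5]] = 0.0                      # pretend training drove these channels to zero

w1_pruned, w2_pruned, keep = prune_channels(w1, w2)
print(w1_pruned.shape)  # (6, 3, 3, 3)
print(w2_pruned.shape)  # (16, 6, 3, 3)
```

Both layers stay ordinary dense tensors after pruning, just smaller, which is why this kind of structured pruning maps well onto GPU kernels.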

Sparse models trained from scratch preserve classification performance.

A smaller model is created as a by-product of sparse training. This improves on other methods, which retrain a pre-trained model to induce sparsity and compress it.

The sparsified model has fewer parameters and lower resource requirements, so it is more feasible to deploy on hardware with limited memory. More instances can also be deployed in parallel in a production environment, allowing for greater scalability.
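To get a feel for the savings, the parameter count of a single convolutional layer can be worked out by hand. The channel counts below are made-up numbers for illustration, not measurements from this project, and `conv_params` is a hypothetical helper.

```python
def conv_params(in_channels, out_channels, kernel_size, bias=True):
    """Number of learnable parameters in a 2D convolution with a square kernel."""
    weights = out_channels * in_channels * kernel_size * kernel_size
    return weights + (out_channels if bias else 0)


# Hypothetical layer before and after channel pruning.
dense = conv_params(in_channels=64, out_channels=128, kernel_size=3)
pruned = conv_params(in_channels=48, out_channels=96, kernel_size=3)

print(dense)                         # 73856
print(pruned)                        # 41568
print(f"{1 - pruned / dense:.1%}")   # 43.7%
```

Because the count scales with the product of input and output channels, pruning a quarter of the channels on both sides removes close to half of the layer's parameters.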